SMS Blog

Centralize Your AI Usage

As AI adoption expands across teams and domains, organizations increasingly rely on multiple models from different providers, each optimized for specific use cases. This flexibility enables the development of high-quality, tailored applications, but it also introduces significant operational complexity. For example, a single company might use OpenAI via Azure for generating marketing content, Claude for summarizing customer support tickets, and a custom model hosted on AWS for forecasting. Each of these applications interacts with distinct APIs, logging mechanisms, and cost structures, making it difficult to manage operations, security, and compliance in a consistent and scalable manner.

In this post, we discuss these challenges in detail and explain how we addressed them to reduce operational overhead. Our goal is to help teams remain agile in a rapidly evolving AI landscape, enabling them to use any approved AI model provider while maintaining consistent security, cost visibility, and governance.

AI’s “Universal Plug”

At SMS, we are actively investing in AI to enhance our internal processes, improve efficiency, boost productivity, and reduce costs. As we developed AI-powered internal applications, we began to notice the following recurring challenges and requirements across use cases.

- Model Flexibility Is Essential: We quickly realized that no single model or provider works best for every use case. Each task often benefits from a different state-of-the-art model, and the “best” model can shift rapidly as new advances are made. To get the most value from our AI efforts, we needed our applications to remain flexible enough to switch between models and providers without significant rework.

- Security Is Non-Negotiable: While we want teams to have access to trusted AI providers tailored to their needs, this flexibility cannot compromise our security policies or AI guardrails. We also want a consistent security posture across all AI applications. For example, if a security issue is identified in one use case, fixes and guardrails should be applied across all applications immediately.

- Centralized Observability: To manage and monitor AI usage effectively, we require standardized logging across all applications. This includes capturing latency and error rates in a consistent format, which enables better auditing and debugging. For instance, if a model starts returning unexpected results, we want to trace the issue across all applications using that model.

- Resilience Through Failovers: We’ve experienced provider outages that caused downstream disruptions. To ensure reliability, our AI applications need built-in failover mechanisms so that if one provider is unavailable, queries can be routed to a fallback provider without user impact.

- Easily Adopt Frequently Changing SDKs: Keeping up with different SDKs, API versions, and documentation across multiple providers is time-consuming. As the AI ecosystem evolves rapidly, we need a solution that minimizes this maintenance overhead.

- Centralized Cost and Usage Tracking: With multiple teams and applications leveraging different models and providers, visibility into usage and associated costs is critical. A centralized dashboard for monitoring helps ensure cost efficiency and accountability across the organization.
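To make the failover requirement above concrete, here is a minimal sketch of the idea in plain Python. It is a generic illustration, not LiteLLM's own routing logic: the function name `complete_with_failover`, the `AllProvidersFailed` exception, and the provider callables are all hypothetical names chosen for this example.

```python
from typing import Callable, Sequence


class AllProvidersFailed(Exception):
    """Raised when every provider in the chain has failed (hypothetical name)."""


def complete_with_failover(
    prompt: str,
    providers: Sequence[tuple[str, Callable[[str], str]]],
) -> tuple[str, str]:
    """Try each (name, call) pair in order and return (provider_name, response).

    Each `call` is assumed to raise an exception when its provider is
    unavailable. Providers are tried strictly in the order given.
    """
    errors = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except Exception as exc:  # a real system would catch narrower errors
            errors.append(f"{name}: {exc}")
    raise AllProvidersFailed("; ".join(errors))
```

The caller only sees which provider answered, so an outage at the first provider does not surface to the user as long as a fallback succeeds.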

Let’s explore our setup in detail to understand how we have solved these problems in our infrastructure.

Our Setup

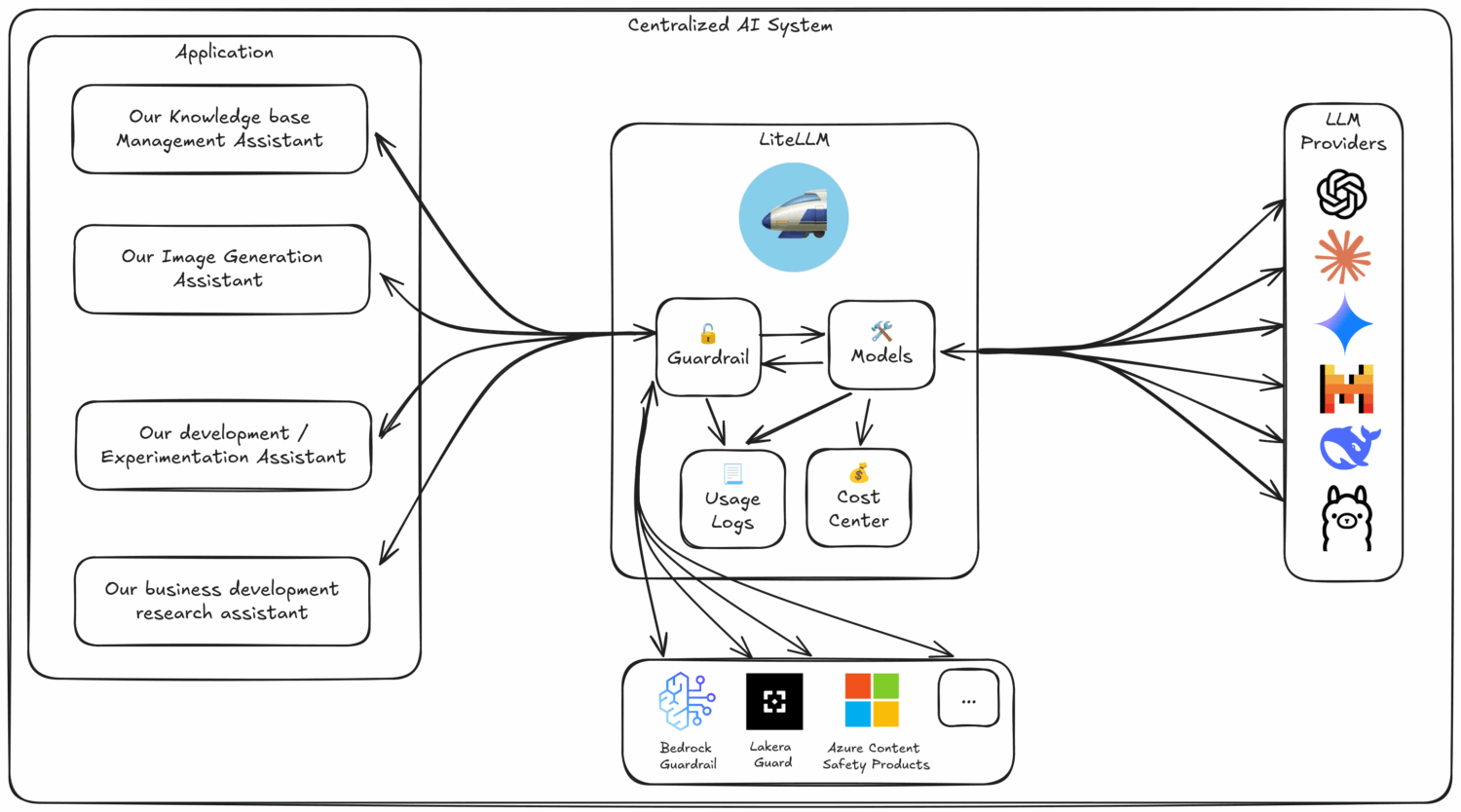

We have adopted LiteLLM as the backbone of our AI stack. It provides a unified, secure interface for integrating with multiple models and providers, ensuring our AI applications remain flexible, scalable, and governed. All application requests are routed through LiteLLM instead of going directly to model providers. LiteLLM acts as a central proxy that adds important capabilities such as security guardrails, usage logging, and cost tracking. This setup allows us to apply consistent policies across applications without needing to duplicate logic in each one.
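As a rough sketch of what "routing through the proxy" looks like from an application's side, the example below builds an OpenAI-style chat completion request aimed at a gateway rather than at a provider. The proxy address, the API key, and the model alias are placeholders invented for illustration, and the sketch assumes the gateway exposes an OpenAI-compatible `/chat/completions` endpoint.

```python
import json
import urllib.request

# Hypothetical values: replace with your own gateway address and key.
PROXY_BASE_URL = "http://litellm.internal.example:4000"
PROXY_API_KEY = "sk-example-virtual-key"


def build_chat_request(model_alias: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model_alias,  # an alias the gateway maps to a real provider model
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{PROXY_BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {PROXY_API_KEY}",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> dict:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.loads(response.read().decode("utf-8"))
```

Because the application only knows the gateway URL and a model alias, pointing that alias at a different provider is a gateway-side change rather than an application change.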

Every request through LiteLLM logs key metadata, including the model used, request cost, originating application, and success or failure details. This gives us clear visibility into AI usage and spending across teams, while ensuring all applications benefit from shared security and operational controls.
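To illustrate how this kind of metadata turns into cost visibility, here is a small sketch that aggregates request logs per application. The `RequestLog` record and its field names are assumptions made for this example; they do not reflect LiteLLM's actual log schema.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class RequestLog:
    """One logged request (hypothetical schema for illustration)."""
    model: str
    application: str
    cost_usd: float
    success: bool


def summarize_by_application(logs: Iterable[RequestLog]) -> dict:
    """Return request count, failure count, and total cost per application."""
    summary = defaultdict(lambda: {"requests": 0, "failures": 0, "cost_usd": 0.0})
    for entry in logs:
        bucket = summary[entry.application]
        bucket["requests"] += 1
        bucket["cost_usd"] += entry.cost_usd
        if not entry.success:
            bucket["failures"] += 1
    return dict(summary)
```

The same loop could group by `model` instead of `application` to answer the question of which models drive spending.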

The diagram at the start of this section gives a visual representation of this infrastructure.

The Benefits

Adopting LiteLLM as our central AI gateway has transformed how we build, secure, and manage AI-powered applications. Here are the most noteworthy benefits:

- One Unified API: All applications communicate with a single endpoint, regardless of the provider or model behind the scenes. That means:

  - Switching models requires minimal changes to application code. We can swap providers or experiment with new models without major rewrites, letting us choose the best tool for each task.

  - Developers no longer need to learn multiple SDKs or handle provider-specific authentication and quirks, which lets us develop applications faster. Additionally, with a single SDK for all providers, building failover protection with another model provider is a trivial task.

- Centralized Guardrails: Moderation, safety filters, and policy enforcement happen in one place, ensuring consistent security across all applications. For example, if we do not want AI to discuss a certain topic, we can create a guardrail rule in LiteLLM and all applications immediately start following the central policy without developers having to update each application separately.

- Centralized Logs and Metrics: Every request is logged with model, cost, application, and outcome data, giving us complete visibility for budgeting, billing, and debugging. This allows us to properly plan and ensure critical information is readily available during auditing.
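The centralized guardrail idea above can be sketched as a single check that every request passes through before it reaches a provider. This is a deliberately simple keyword-based illustration, not how LiteLLM implements guardrails; `GuardrailRule` and `check_prompt` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class GuardrailRule:
    """A named rule that blocks prompts containing any listed term."""
    name: str
    blocked_terms: tuple


def check_prompt(prompt: str, rules: Iterable[GuardrailRule]) -> list:
    """Return the names of all rules the prompt violates (empty if allowed)."""
    lowered = prompt.lower()
    return [
        rule.name
        for rule in rules
        if any(term.lower() in lowered for term in rule.blocked_terms)
    ]
```

Because the rule list lives in one place, adding a rule changes the behavior of every application behind the gateway at once, which is the property the bullet above describes.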

The Challenges

While LiteLLM has delivered significant benefits, centralizing AI traffic also introduces some trade‑offs and considerations. We’ve addressed these with careful design, but they are worth noting:

- Single Point of Failure: Routing all AI requests through LiteLLM creates a potential bottleneck. If our LiteLLM instance goes down, every application that depends on it stops working, affecting multiple teams at once. Mitigation Technique: We can run multiple LiteLLM instances behind a load balancer, with health checks and automated failover to ensure high availability.

- Additional Infrastructure Layer: LiteLLM becomes another service to deploy, monitor, and scale. Adding or removing high‑traffic applications can impact performance. Mitigation Technique: We can containerize LiteLLM, automate scaling, and monitor request volumes so we can adjust resources proactively.

- Latency Overhead: The proxy layer adds a small delay to requests, because every request must first pass through LiteLLM instead of going directly to the LLM provider. LLM responses are already relatively slow, so extra latency is worth minimizing. Mitigation Technique: We can keep LiteLLM deployments close to our applications (for example, in the same AWS region), enable connection pooling, and cache results for certain repeated queries.

- Configuration Management Complexity: As more models and teams are added, the configuration can become hard to manage. Mitigation Technique: We can store all LiteLLM configs in version control, enforce reviews for changes, and tag each configuration with metadata for auditing.
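As one example of the latency mitigations above, caching results for repeated queries can be sketched with a small in-memory cache keyed by model and messages. The `ResponseCache` class is a hypothetical illustration; a production cache would also need expiry and a policy for which queries are safe to cache.

```python
import hashlib
import json
from typing import Callable


class ResponseCache:
    """In-memory cache keyed by model name and message list (illustrative)."""

    def __init__(self) -> None:
        self._store = {}

    @staticmethod
    def _key(model: str, messages: list) -> str:
        # Serialize deterministically so identical requests share a key.
        canonical = json.dumps({"model": model, "messages": messages}, sort_keys=True)
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

    def get_or_call(self, model: str, messages: list, call: Callable) -> str:
        """Return the cached response, calling `call(model, messages)` on a miss."""
        key = self._key(model, messages)
        if key not in self._store:
            self._store[key] = call(model, messages)
        return self._store[key]
```

Only exact repeats hit the cache in this sketch, so it helps most with fixed prompts such as FAQ-style lookups.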

Conclusion

As AI adoption accelerates, organizations inevitably face growing complexity with more providers, more models, and more operational overhead. That complexity does not have to slow you down. LiteLLM enables you to centralize your AI stack while maintaining the flexibility to choose the best model for each task. With a single API, consistent logging, cost visibility, and provider independence, you can scale AI usage without being locked into a single ecosystem.

LiteLLM is not the only path to achieving this. Alternatives such as moving AI workflows into a central Langfuse instance or using SaaS platforms like airia.com can deliver similar benefits by combining observability, governance, and guardrails. We will explore these tools and architectures in future posts, so stay tuned.

If you are looking to introduce AI into your infrastructure securely, efficiently, and at scale, our team can help. Contact us at [email protected] to discuss how we can accelerate your AI journey.